King Cake Baby

I was in New Orleans last week at Tulane for Love Data Week 2026 where I gave my Data Art talk. But that’s not important. While I was there, I learned all about Mardi Gras traditions that I was completely unaware of, specifically: King Cakes. For some reason, these lunatics in New Orleans hide a plastic baby in these cakes and if you get the piece with the plastic baby you have to buy the next king cake. But even this doesn’t really matter. Here is what matters: during Mardi Gras some sporting events have this baby show up as a mascot. I now present to you King Cake Baby (sent to me by Lisa Dilks). It’s glorious and I love it with all my heart.

Cheers.

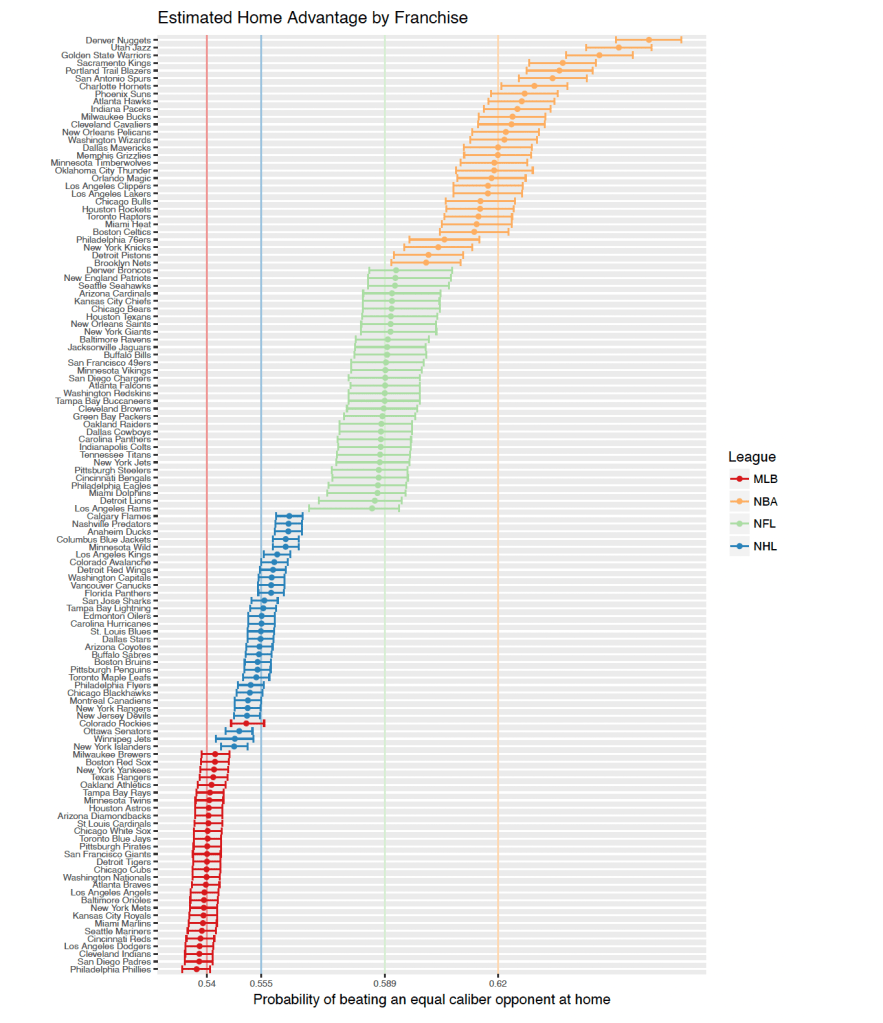

Home advantage

This plot is awesome.

Read the full paper: https://projecteuclid.org/journals/annals-of-applied-statistics/volume-12/issue-4/How-often-does-the-best-team-win-A-unified-approach/10.1214/18-AOAS1165.full

Cheers.

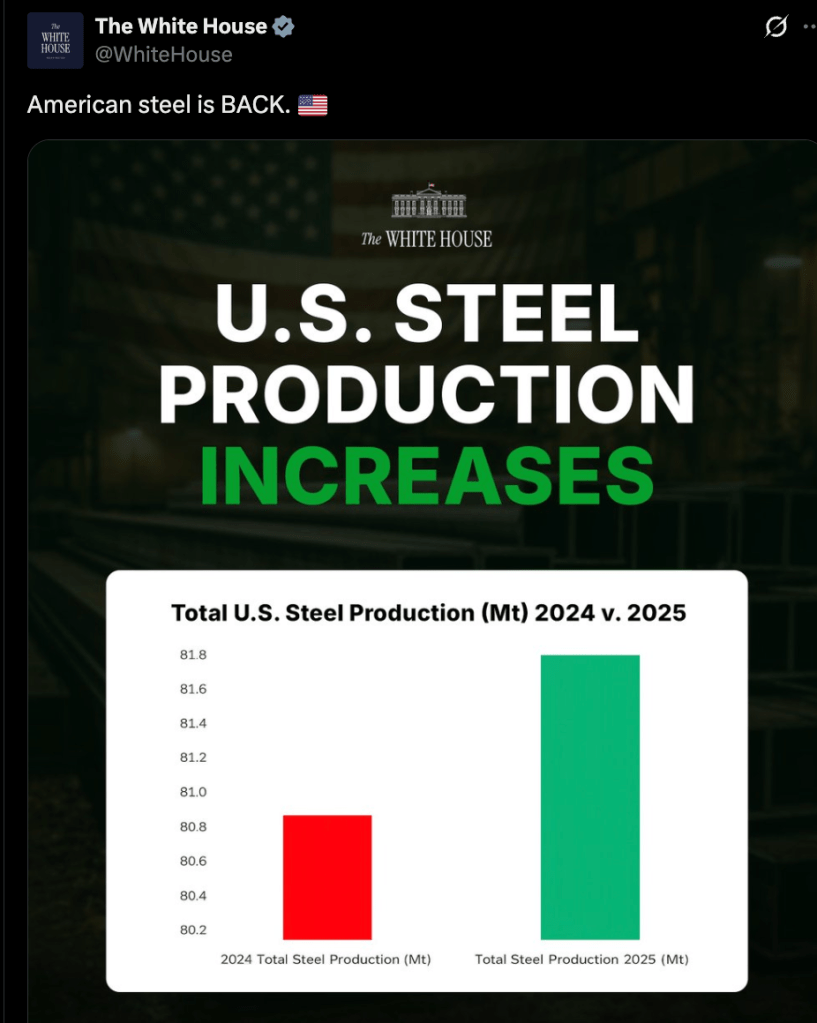

Lies, Damn Lies, and Trump Stats

The White House just posted this tweet:

The “Readers added context” note correctly flags this as misleading: the actual increase is only 1.2%. The problem is a truncated y-axis. Don’t do this with bar charts!

Preserved here if this ever gets taken down:

Boy, this Donald Trump fellow sure does seem dishonest.

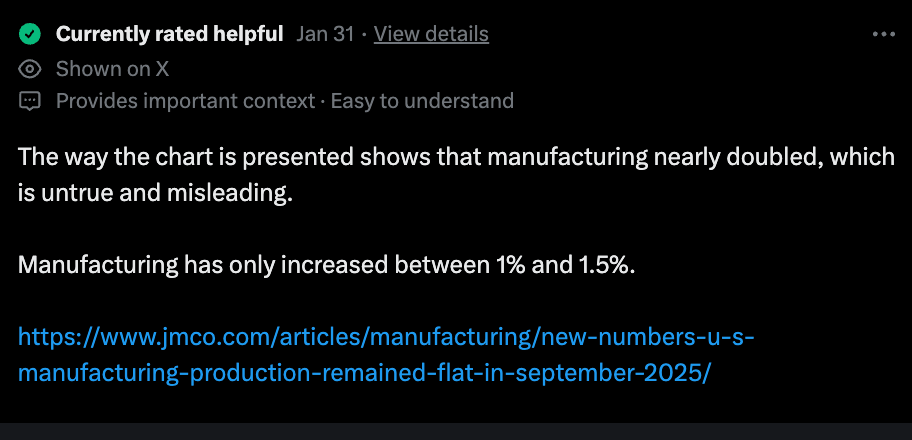

This reminds me of the famous Fox News graph “If the Bush Tax Cuts Expire”.

Man, it’s almost like all this dishonesty is coming from one side and they are doing it on purpose……
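To put a number on how much a truncated y-axis distorts a bar chart, Edward Tufte's “lie factor” divides the size of the effect shown in the graphic by the size of the effect in the data. Here is a minimal R sketch with made-up values (the tweet's actual numbers and axis limits are not reproduced here): a 1.2% increase drawn on a y-axis that starts at a hypothetical 99 instead of 0.

```r
# Hypothetical values, not taken from the White House tweet
before <- 100
after <- 101.2    # a 1.2% increase
axis_start <- 99  # hypothetical truncated baseline of the y-axis

# Tufte's lie factor: effect shown in the graphic / effect in the data
lie_factor <- function(before, after, axis_start = 0) {
  actual_change <- (after - before) / before
  # Bars are drawn up from the axis baseline, so their heights are value - axis_start
  drawn_change <- ((after - axis_start) - (before - axis_start)) / (before - axis_start)
  drawn_change / actual_change
}

lie_factor(before, after, axis_start)  # roughly 100: the bars exaggerate the change about 100-fold
lie_factor(before, after)              # 1: bars that start at zero are honest
```

Notice that the lie factor here simplifies to before / (before - axis_start), so the closer the axis starts to the data, the bigger the lie.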

Cheers.

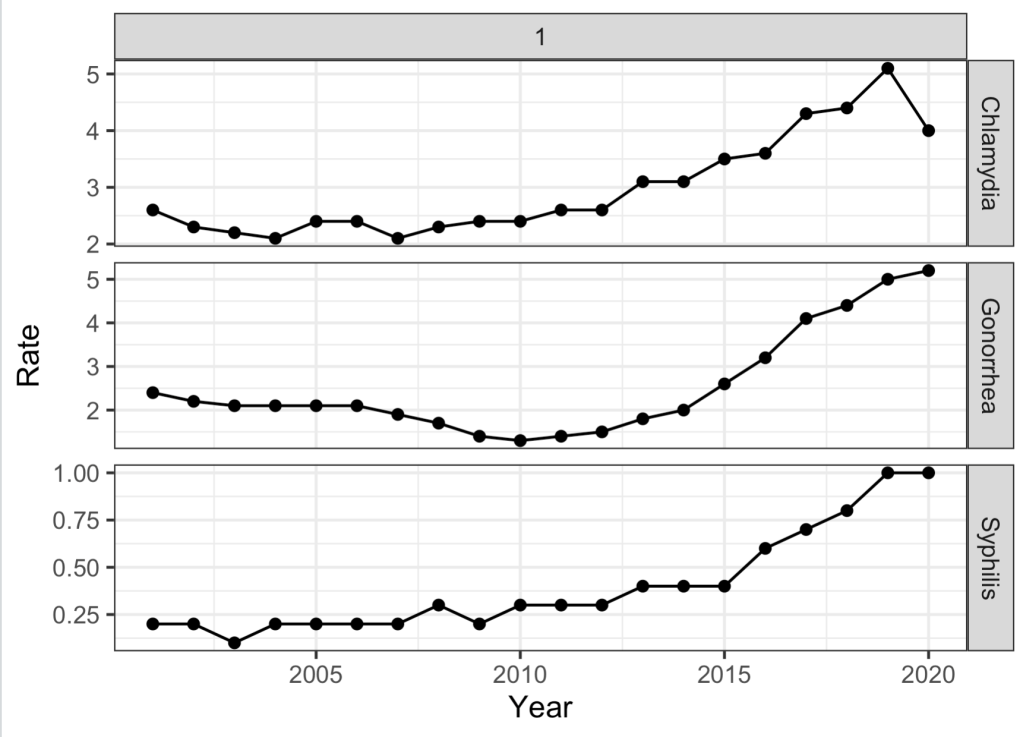

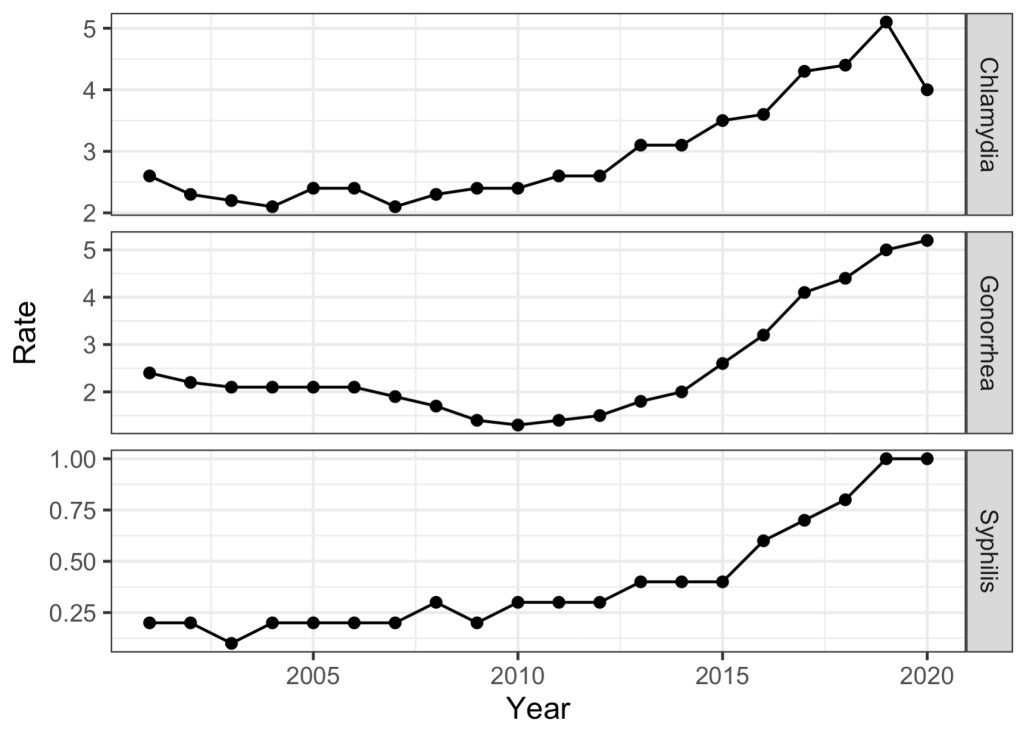

TIL: facet_grid edition

Today I learned how to get rid of the pesky “1” on the top of a ggplot facet_grid if I want to stack the plots vertically. Use facet_grid(rows = vars(group)). See my example below:

```r
library(tidyverse)

# Ugh. Why is there a 1?
rbind(
  PSold %>% filter(Age == "65+") %>% mutate(STI = "Syphilis"),
  GCold %>% filter(Age == "65+") %>% mutate(STI = "Gonorrhea"),
  CTold %>% filter(Age == "65+") %>% mutate(STI = "Chlamydia")
) %>%
  ggplot(aes(x = Year, y = Rate)) +
  geom_point() +
  geom_line() +
  theme_bw() +
  facet_grid(STI ~ 1, scales = "free_y")

# Get rid of the pesky 1
rbind(
  PSold %>% filter(Age == "65+") %>% mutate(STI = "Syphilis"),
  GCold %>% filter(Age == "65+") %>% mutate(STI = "Gonorrhea"),
  CTold %>% filter(Age == "65+") %>% mutate(STI = "Chlamydia")
) %>%
  ggplot(aes(x = Year, y = Rate)) +
  geom_point() +
  geom_line() +
  theme_bw() +
  facet_grid(rows = vars(STI), scales = "free_y")
```
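The example above depends on data frames (PSold, GCold, CTold) that aren't shown here, so here is a minimal reproducible sketch of the same fix using the mpg data that ships with ggplot2. The choice of dataset and variables is mine, not part of the original example.

```r
library(ggplot2)

# facet_grid(drv ~ 1) would add a constant "1" column facet with its own strip label.
# rows = vars(drv) facets by drv only, stacking the panels vertically.
p <- ggplot(mpg, aes(x = displ, y = hwy)) +
  geom_point() +
  theme_bw() +
  facet_grid(rows = vars(drv), scales = "free_y")

p
```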

Cheers.

The University of Nebraska Board of Regents is a dumpster fire

When I was a kid, I used to think that if someone was in a high-up position, they must be really smart and competent. Now that I’m in my 40s, it seems like it’s almost the opposite. I’m wildly unimpressed by most of the people in leadership positions that I meet or read about. But I could never have imagined the level of incompetence of the Board of Regents for the University of Nebraska.

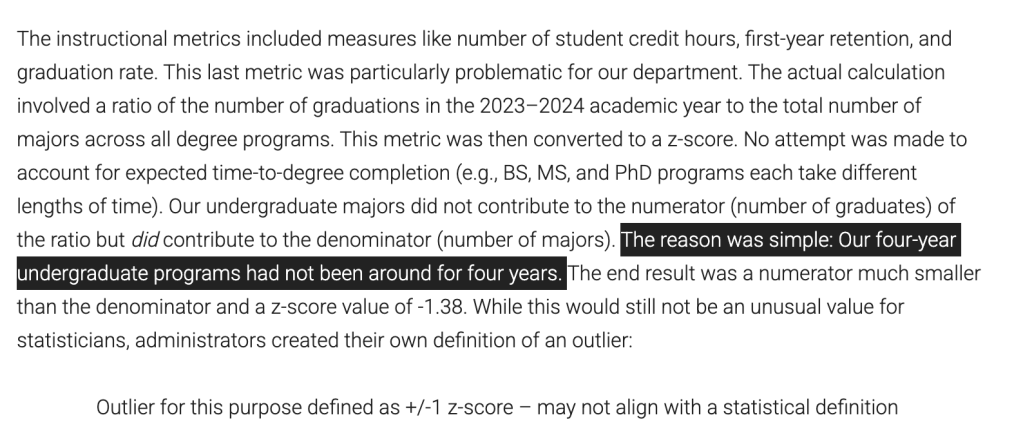

They recently cut their statistics program, along with a few others, based on metrics showing that these programs were underperforming, but there really weren’t many details about what these metrics were or how they were computed. However, AMSTAT News recently published an interview with two professors from the University of Nebraska-Lincoln about what happened (you can read the full article here). In that article, they detail several flaws in how these metrics were calculated, but one of them stands out above all the others as the peak of dip shittery.

So, one of the metrics the Board of Regents used in their calculation was graduation rate. Graduation rate is the number of graduates divided by the number of majors. Simple enough. Now, for the statistics department, this number was 0%. Why? Because the department hadn’t existed for 4 years yet.
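Here is a minimal sketch of why that metric breaks, using made-up numbers (none of these figures come from the AMSTAT article). The naive ratio returns 0% for any program too young to have graduated anyone. One alternative, written here as a hypothetical helper cohort_grad_rate(), only counts students who entered long enough ago to have finished, and returns NA (undefined) rather than 0 when no such cohort exists.

```r
# Hypothetical numbers for a program in its first few years
majors <- 40
graduates <- 0  # nobody has had time to finish a 4-year degree yet

# The metric as described: graduates divided by majors
naive_rate <- graduates / majors
naive_rate  # 0, i.e. a "0% graduation rate"

# Hypothetical alternative: only count students who entered at least
# years_to_degree years ago; with no eligible cohort the rate is undefined
cohort_grad_rate <- function(entry_year, graduated, current_year, years_to_degree = 4) {
  eligible <- entry_year <= current_year - years_to_degree
  if (!any(eligible)) return(NA_real_)
  mean(graduated[eligible])
}

# A program whose first students entered in 2022 (hypothetical)
cohort_grad_rate(entry_year = c(2022, 2022, 2023, 2024),
                 graduated = c(FALSE, FALSE, FALSE, FALSE),
                 current_year = 2025)  # NA, not 0
```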

Here is the relevant paragraph from the AMSTAT article:

And just as a nice added middle finger to the statistics department, the Board of Regents decided to come up with their own definition of what an outlier is.

There is no nice way to say this: these people are world-class unimpressive.

Cheers.

AI fails again

I was trying to fit a mixed effects model, and I wanted to compare the model with the same fixed effects with and without a random intercept in an ANOVA. I wasn’t sure how to do this using lmer from the lme4 package, so I googled it:

It gave me the following answer with code:

```r
# Example data
data <- data.frame(
  response = rnorm(100),
  fixed_effect_1 = rnorm(100),
  fixed_effect_2 = rnorm(100)
)

# Fit a linear mixed model with no random effects
model <- lmer(response ~ fixed_effect_1 + fixed_effect_2, data = data)

# Display the model summary
summary(model)
```

I was pretty sure this wouldn't work, but I tried it anyway. And it doesn't work. You can't fit an lmer model without specifying random effects.

For the record, I’m not sure my question even makes sense. I think I should be comparing these models with AIC rather than ANOVA, now that I think about it for more than a second.
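For what it’s worth, here is one way to make that comparison. Since lmer() needs at least one random-effects term, the model without the random intercept is fit with lm(), and the mixed model is fit by maximum likelihood (REML = FALSE) so the two log-likelihoods, and therefore the AICs, are on the same footing. The data are simulated and group is a hypothetical grouping factor. If you do want a likelihood ratio test, note that the null hypothesis (random-intercept variance equal to zero) sits on the boundary of the parameter space, so the usual chi-square p-value is conservative; a common adjustment is to halve it.

```r
library(lme4)

set.seed(2026)
# Simulated data: 20 hypothetical groups with 5 observations each
n_groups <- 20
group <- factor(rep(seq_len(n_groups), each = 5))
x1 <- rnorm(100)
group_effect <- rnorm(n_groups)
y <- 1 + 0.5 * x1 + group_effect[group] + rnorm(100)
dat <- data.frame(y, x1, group)

# Same fixed effects, without and with a random intercept for group
fit_fixed <- lm(y ~ x1, data = dat)
fit_mixed <- lmer(y ~ x1 + (1 | group), data = dat, REML = FALSE)  # ML, not REML

# Lower AIC is better
aic_table <- AIC(fit_fixed, fit_mixed)
aic_table

# Likelihood ratio test for the random intercept, halving the chi-square
# p-value because the null (variance = 0) is on the boundary
lrt_stat <- as.numeric(2 * (logLik(fit_mixed) - logLik(fit_fixed)))
p_value <- 0.5 * pchisq(lrt_stat, df = 1, lower.tail = FALSE)
p_value
```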

Anyway,

Cheers.

However bad you think the Rockies are, they are worse. Even worse than the White Sox last year (so far).

Update: They swept the Marlins, are now on a 3-game winning streak, and sit at 12-50. They are 25 games out of first place with 100 games to play.

Update: They won last night and are now 10-50 as of noon on June 3.

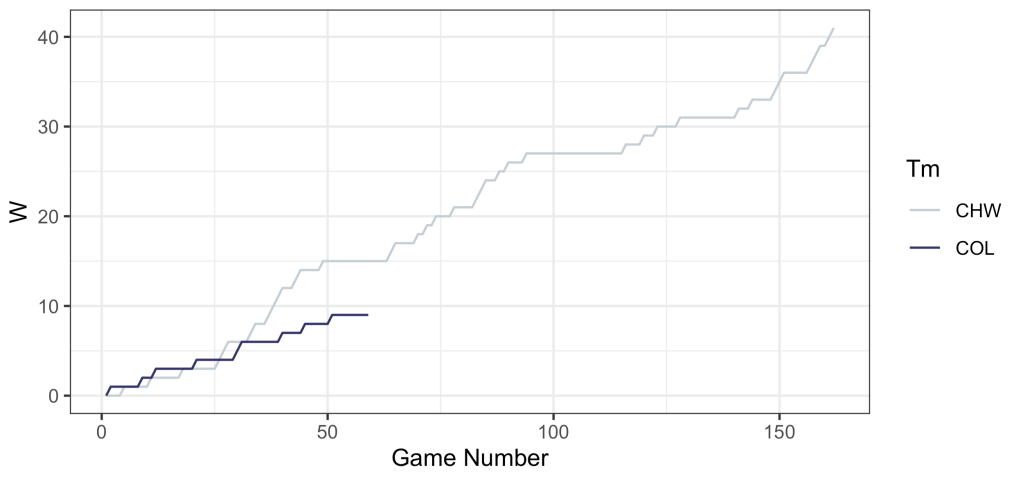

The Rockies are 9-50. That’s 50 losses in 59 games, a winning percentage of .153, and the fastest any team has gotten to 50 losses since 1901. But didn’t we just get a new worst-ever team last year in the Chicago White Sox? Yup! The Chicago White Sox lost 121 games last year, the most in the modern era. And the Rockies are currently on pace to shat. ter. the White Sox’s loss record from last year. They are on pace to go 25-137. How bad is this? At this point in the season, the White Sox were 15-44, SIX games ahead of where the Rockies are right now. To see this, let’s look at some data viz that I made.
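The “on pace” and “games ahead” numbers are simple arithmetic. Here is a quick R sketch that reproduces them from the records quoted above (9-50 for the Rockies, 15-44 for the 2024 White Sox at the same point, and a 162-game season), using the standard games-behind formula from baseball standings.

```r
# Records quoted in the post
rockies_w <- 9
rockies_l <- 50
season_games <- 162

win_pct <- rockies_w / (rockies_w + rockies_l)  # 9 / 59, about .153
proj_wins <- round(win_pct * season_games)      # 25
proj_losses <- season_games - proj_wins         # 137

# Games behind: ((W_a - W_b) + (L_b - L_a)) / 2, where team a is ahead of team b
games_behind <- function(w_a, l_a, w_b, l_b) ((w_a - w_b) + (l_b - l_a)) / 2
games_behind(15, 44, rockies_w, rockies_l)      # 6: 2024 White Sox (15-44) vs. Rockies (9-50)
```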

Below is a plot of game number versus cumulative wins. Up until about game 30, the Rockies were merely as bad as the White Sox. Since then, they have really stepped down their game thanks to multiple 8-game losing streaks. Speaking of streaks, let’s take a look at those.

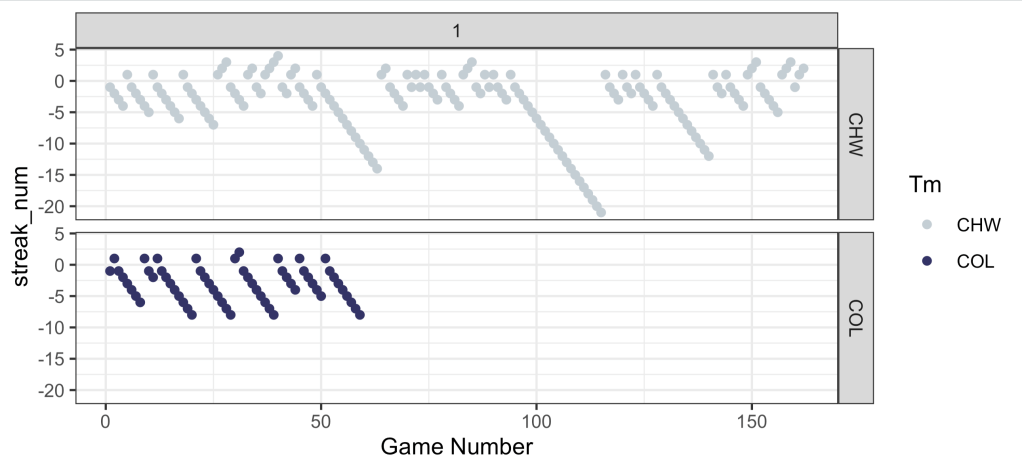

Below, you’ll find a plot with game number on the x-axis and the win/loss streak on the y-axis. Positive numbers are winning streaks and negative numbers are losing streaks. You can see that the Rockies have already had FOUR 8-game losing streaks. But even more impressive than that, they’ve only won back-to-back games a single time this season. Let me repeat this: their longest winning streak of the season is 2 games, and it’s happened exactly once. Last year the White Sox had winning streaks of 2 or more games 9 times in total, 4 of them by this point in the season. They even had a nifty little 4-game winning streak (they also had losing streaks of 12, 14, and 21(!!!) games).
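The streak plot uses the Streak column from the game logs. If all you have is a sequence of wins and losses, base R’s rle() (run-length encoding) gives the same kind of summaries: the signed streak after each game, the longest streaks, and how many winning streaks reached 2 or more games. A minimal sketch on a made-up sequence of results:

```r
streak_summary <- function(results) {
  runs <- rle(results)  # consecutive runs of identical results
  win_runs <- runs$lengths[runs$values == "W"]
  loss_runs <- runs$lengths[runs$values == "L"]
  list(
    longest_win_streak = if (length(win_runs) > 0) max(win_runs) else 0L,
    longest_loss_streak = if (length(loss_runs) > 0) max(loss_runs) else 0L,
    win_streaks_2plus = sum(win_runs >= 2)
  )
}

# Made-up sequence of results, in game order
results <- c("L", "L", "W", "L", "W", "W", "L", "L", "L", "W", "L")
s <- streak_summary(results)

# Signed streak after each game (+ for wins, - for losses), like the plot's y-axis
signed_streak <- ifelse(results == "W", 1, -1) * sequence(rle(results)$lengths)
```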

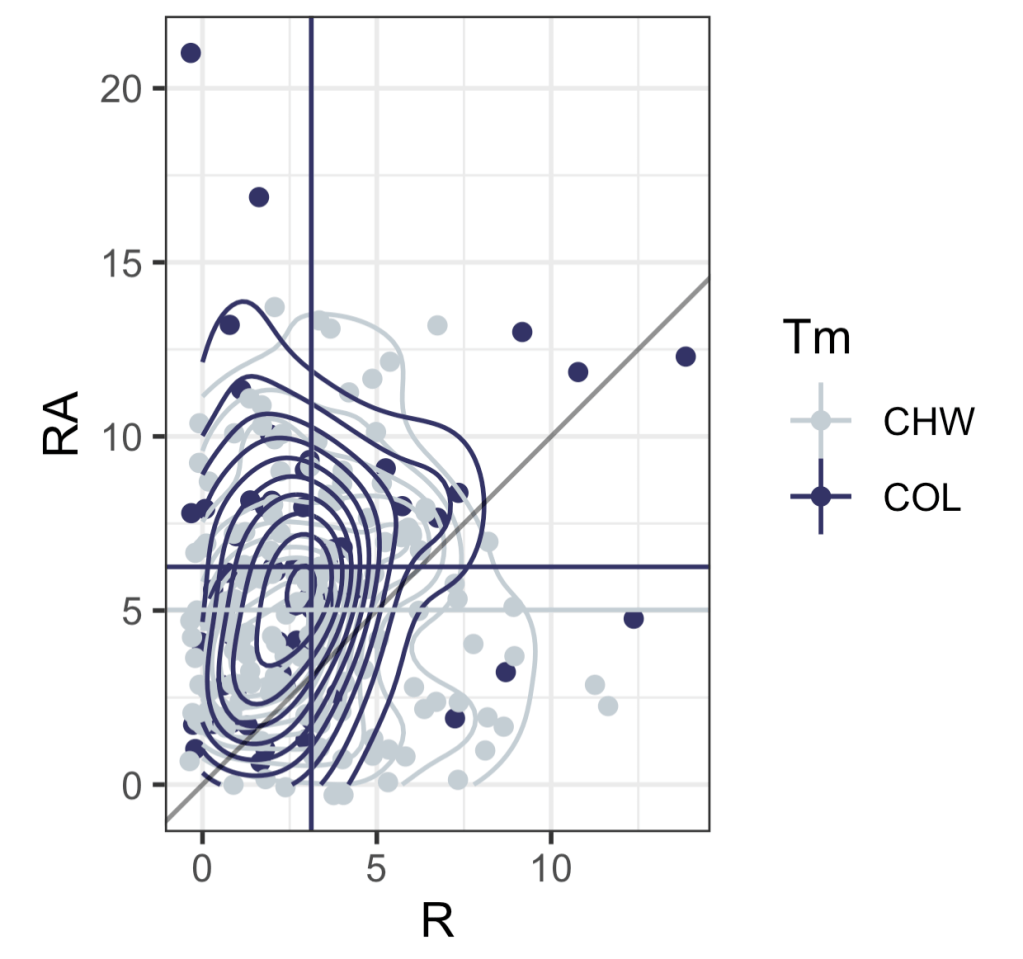

Now let’s take a look at scoring for the Rockies. Below is a 2D contour plot of runs scored and runs allowed for every game of the White Sox 2024 season (left panel) and every game of the current Rockies season (right panel). The vertical and horizontal lines mark each team’s mean runs scored and mean runs allowed, and the diagonal line shows whether a game was won or lost (above the line is a loss, below the line is a win). What’s really interesting about this is that in the entire 2024 season, the White Sox scored 10 or more runs only TWICE. The Rockies have already done this 3 times. Also, the most runs the White Sox gave up in a game all season was 14. The Rockies have already given up more than 14 runs twice (a 17-2 loss to the Brewers and a 21-0 loss to the Padres).

Here is what these plots look like on top of one another. What you’ll notice is that it’s hard to see the vertical line for the White Sox (i.e., their mean runs scored) because it’s nearly identical to the Rockies’. The White Sox averaged 3.13 runs per game last year, and the Rockies are just slightly below that at 3.12 runs per game. But where Colorado really “shines” is their defense. While the White Sox gave up an average of just over 5 runs per game (5.02, to be exact), the Rockies are currently allowing, and I can’t believe this is true, 6.25 runs per game. That’s 1.23 more runs per game on average than the worst team in the modern history of baseball. Incredible work.

If you’re more of a median type of person, I computed those too. Last year the White Sox scored a median of 3 runs and allowed a median of 5 runs. This year the Rockies are scoring a median of 2 runs and allowing a median of 6 runs.

Anyway, the point is that the Rockies are [really]+ bad.

My code is below.

Cheers.

```r
library(tidyverse)
library(teamcolors)

# Game-by-game results for each team
rock <- read.csv("/Users/gregorymatthews/Dropbox/statsinthewild/rockies2025_20250602.csv")
ws <- read.csv("/Users/gregorymatthews/Dropbox/statsinthewild/whitesox2024.csv")
names(rock)[1] <- names(ws)[1] <- "gameno"

# Add a signed streak (first character of Streak gives the sign, its length
# gives the size) and split the "W-L" record column into wins and losses
add_record_cols <- function(df) {
  df$streak_num <- as.numeric(paste0(substring(df$Streak, 1, 1), nchar(df$Streak)))
  df$W <- as.numeric(sapply(strsplit(df$W.L.1, "-"), `[`, 1))
  df$L <- as.numeric(sapply(strsplit(df$W.L.1, "-"), `[`, 2))
  df
}
rock <- add_record_cols(rock)
ws <- add_record_cols(ws)

both <- rbind(rock, ws)

# Team colors: White Sox secondary, Rockies primary
small <- teamcolors %>% filter(name %in% c("Chicago White Sox", "Colorado Rockies"))
team_cols <- c(small$secondary[1], small$primary[2])

# Cumulative wins by game number
ggplot(both, aes(x = gameno, y = W, color = Tm)) +
  geom_path() +
  theme_bw() +
  scale_color_manual(values = team_cols) +
  xlab("Game Number")

# Win/loss streak by game number, one panel per team
ggplot(both, aes(x = gameno, y = streak_num, color = Tm)) +
  geom_point() +
  theme_bw() +
  facet_grid(rows = vars(Tm)) +
  scale_color_manual(values = team_cols) +
  xlab("Game Number")

# Runs scored vs. runs allowed, with team means and the win/loss diagonal
team_means <- both %>% group_by(Tm) %>% summarise(R = mean(R), RA = mean(RA))
ggplot(both, aes(x = R, y = RA, color = Tm)) +
  geom_jitter() +
  geom_density2d() +
  scale_color_manual(values = team_cols) +
  theme_bw() +
  geom_abline(slope = 1, color = rgb(0, 0, 0, 0.5)) +
  geom_vline(aes(xintercept = R, color = Tm), data = team_means) +
  geom_hline(aes(yintercept = RA, color = Tm), data = team_means) +
  coord_fixed()

# Summary statistics
both %>% group_by(Tm) %>% summarise(median(R), median(RA))
both %>% group_by(Tm) %>% summarise(mean(R), mean(RA))
both %>% filter(Tm == "COL") %>% pull(R) %>% table()
both %>% filter(Tm == "CHW") %>% pull(R) %>% table()
```

Kaggle Probs for Sweet Sixteen games

Men’s

Alabama over BYU – 72.1%

Duke over Arizona – 90.3%

Michigan St. over Ole Miss – 78.0%

Tennessee over Kentucky – 76.3%

Florida over Maryland – 90.3%

Texas Tech over Arkansas – 62.4%

Auburn over Michigan – 77.0%

Houston over Purdue – 77.0%

Women’s

South Carolina over Maryland – 96.2%

Duke over North Carolina – 72.1%

UCLA over Ole Miss – 95.8%

LSU over NC State – 29.6%

Texas over Tennessee – 91.8%

TCU over Notre Dame – 50.0%

USC over Kansas St. – 96.2%

UConn over Oklahoma – 96.2%

Cheers.

Kaggle Probs for today’s games – 3/22/2025

Women

Iowa: 80.8%

UConn: 100%

Alabama: 88.5%

West Virginia: 86.4%

NC State: 100%

Oklahoma: 100%

USC: 100%

Oklahoma St: 72.8%

Maryland: 91.3%

Michigan St: 66.4%

North Carolina: 100%

California: 55.7%

Illinois: 55.7%

Florida St: 66.4%

Texas: 100%

LSU: 100%

Men

Purdue: 58.3%

St. John’s: 82.4%

Texas A&M: 50.0%

Texas Tech: 62.4%

Auburn: 78.3%

Wisconsin: 51.0%

Houston: 73.9%

Tennessee: 82.4%

Cheers.